Kubernetes Questions

Some questions are missing answers; please get in touch.

Why is Terraform needed?

Why is the Terraform abstraction typically used to manage Kubernetes (k8s)? Why can't Kubernetes be the complete declarative model?!

I heard that https://github.com/kubernetes-sigs/cluster-api hopes to address this bootstrapping issue.

What's the point of scaling (replicas) of a pod on a single node?

Is it supposed to be some el cheapo form of concurrency?

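Yes, this is especially applicable to Node.js projects, since a Node.js process runs JavaScript on a single thread and extra replicas let it use more CPU cores.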
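As a rough sketch of what that looks like, the Deployment below asks for three replicas of a single-threaded Node.js app so that more than one CPU core can be kept busy, even if all replicas land on the same node. The name `knote` and the image `example/knote:1.0.0` are assumptions for illustration only.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: knote                          # hypothetical app name
spec:
  replicas: 3                          # three processes, possibly all on one node
  selector:
    matchLabels:
      app: knote
  template:
    metadata:
      labels:
        app: knote
    spec:
      containers:
        - name: knote
          image: example/knote:1.0.0   # hypothetical image
          ports:
            - containerPort: 3000
```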

Why are Kubernetes app packages (aka Helm charts) so very obtuse?

They make the succinct PKGBUILD of Arch Linux look absolutely ingenious.

Helm can bundle all the resources at a particular version and deploy them all in one go. Instead of Helm you can literally just keep your YAML in a directory and apply it in one go. Helm provides a workflow for people who aren't so careful about maintaining the state of their declarative models in git.

Helm charts are very obtuse because, unlike packages, they usually describe a cohort of microservices. Most charts do seem to go overboard with configs that stretch for pages, unlike my preferred choice, docker-compose.yml.

How do you monitor your application?

Assuming it exports at /metrics? At each pod? At the load balancer?

At the pod!

Prometheus in a k8s cluster appears to export a ton of metrics. I wonder which are actually useful.

If Kubernetes declarative YAML was so awesome, how come no upstream app seems to ship it?

There is a fledgling concept called kube

Why does prometheus-operator suck so hard?

All I want is a Prometheus autodiscovery/autoscraping solution whereby I deploy pods exporting /metrics (my hendry/sla image) and a single Prometheus pod can autodiscover them and scrape them.

https://github.com/helm/charts/tree/master/stable/prometheus-operator is far FAR too heavy. I just want one Prometheus process. https://github.com/coreos/kube-prometheus is also nuts. I don't need a highly available {Prometheus,Alertmanager} on my cluster... DO I?

Use the simpler k8pods approach demonstrated at https://github.com/kaihendry/sla/blob/master/k8s/prometheus-deployment.yml
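For a sense of how little configuration a single Prometheus process needs to discover pods on its own, here is a minimal sketch of a scrape config using Prometheus's built-in Kubernetes service discovery. It is not copied from the file linked above. The `prometheus.io/scrape` annotation is a common convention rather than anything built in, and Prometheus is assumed to run with a ServiceAccount that is allowed to list pods.

```yaml
# prometheus.yml (fragment): one Prometheus process discovering pods itself
scrape_configs:
  - job_name: kubernetes-pods
    kubernetes_sd_configs:
      - role: pod                # discover pods through the Kubernetes API
    relabel_configs:
      # Keep only pods annotated with prometheus.io/scrape: "true" (assumed convention)
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: "true"
      # Carry the namespace and pod name over as labels on every series
      - source_labels: [__meta_kubernetes_namespace]
        target_label: namespace
      - source_labels: [__meta_kubernetes_pod_name]
        target_label: pod
```

With this in place, any pod carrying the annotation and serving /metrics on a declared container port should show up as a target without further changes to the Prometheus config.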

How do you hide the /metrics path from being exposed to the public?

Host /metrics on :8081 and keep your app on :8080, so that the Service only needs to expose :8080.
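A sketch of the Service side of that split, assuming the pods are labelled `app: sla` (a hypothetical label) and listen on both ports. Only the app port is listed, so /metrics is never routed through the public Service.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: sla                 # hypothetical name
spec:
  selector:
    app: sla                # hypothetical pod label
  ports:
    - name: http
      port: 80
      targetPort: 8080      # only the app port is routed by the Service
  # Port 8081 (/metrics) is deliberately not listed. It remains reachable
  # on the pod IP from inside the cluster, which is what Prometheus needs.
```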

How does health check work? /healthz? /ping?
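One common answer, sketched here under assumptions: the kubelet performs an HTTP GET against a path you choose, restarts the container when the liveness probe keeps failing, and takes the pod out of the Service endpoints while the readiness probe fails. The path name itself (/healthz, /ping) is only a convention. The container name, image, port and timings below are hypothetical.

```yaml
# Fragment of a Deployment's pod template
containers:
  - name: knote
    image: example/knote:1.0.0   # hypothetical image
    livenessProbe:               # failing this restarts the container
      httpGet:
        path: /healthz
        port: 3000
      initialDelaySeconds: 5
      periodSeconds: 10
    readinessProbe:              # failing this stops traffic to the pod
      httpGet:
        path: /healthz
        port: 3000
      periodSeconds: 5
```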

What handles SSL?

Consider:

    apiVersion: v1
    kind: Service
    metadata:
      name: knote
    spec:
      selector:
        app: knote
      ports:
        - port: 80
          targetPort: 3000
      type: LoadBalancer

Now how is one expected to put this upon https://example.com on port 443!?
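One possible answer is to terminate TLS at an Ingress in front of the Service. The sketch below assumes an ingress controller is already installed in the cluster, that the cluster serves the `networking.k8s.io/v1` Ingress API (Kubernetes 1.19 or newer), and that a TLS Secret named `example-com-tls` exists. The Secret name is hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: knote
spec:
  tls:
    - hosts:
        - example.com
      secretName: example-com-tls   # hypothetical Secret of type kubernetes.io/tls
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: knote         # the Service shown above
                port:
                  number: 80
```

With an Ingress in front, the Service no longer needs `type: LoadBalancer` of its own. The ingress controller accepts traffic on 443 and forwards plain HTTP to port 80 of the Service.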

How do I validate my YAML?

Originally asked on the forum: https://discuss.kubernetes.io/t/week-in-as-full-stack-yaml-developer/8511

A convenient way to check all the values that you could have in the YAML is the API: https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.16/

VSCode has an excellent Kubernetes extension that autocompletes your YAML. I’m not aware of an equivalent for Vim.

In Kubernetes, we assume that Docker images are immutable. If you push a new image under the same tag (such as latest), Kubernetes won’t detect the change and you have to trigger a rolling update manually.

If you push each image with a unique tag and update the image field of the Deployment to that tag, Kubernetes rolls out the new version automatically.

Services have (usually) 2 ports that are worth remembering:

- port is the port exposed by the Service
- targetPort is the port exposed by the Pods. It is used by the Service to route the traffic to a Pod.

So if you wish to expose a different port on the Service, you can simply change the port field to 9000.
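For example, here is the knote Service from the SSL question with only the Service-facing port changed to 9000. The Pods keep listening on 3000.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: knote
spec:
  selector:
    app: knote
  ports:
    - port: 9000         # port exposed by the Service
      targetPort: 3000   # port the Pods listen on
  type: LoadBalancer
```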

Is it possible to do a blue/green deployment and not drop a request on a single node?

Yes, create two deployments with Pods that have different labels. The service points to the first label; when you’re ready, point the service to the label for the second deployment. Blue-green is that straightforward.
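A minimal sketch of the switch, assuming two Deployments whose Pods carry the labels `version: blue` and `version: green` respectively. The `version` label key and its values are hypothetical.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: knote
spec:
  selector:
    app: knote
    version: blue      # change to "green" to send all traffic to the second Deployment
  ports:
    - port: 80
      targetPort: 3000
```

Editing that one selector value and re-applying the Service is the whole cutover. The old Deployment keeps running until you delete it, which also gives you a quick way back.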

Why isn't it as simple as updating the image name? Labels sound like pointless bureaucracy.

Updating the image of the current deployment executes a rolling update, which is NOT blue/green.

In a blue-green deployment you move all the instances from v1 to v2 at once. In a rolling update v1 and v2 coexist. Rolling update -> incremental updates; blue-green -> breaking changes.

OK, let me give you a practical example. You built an API that returns the money in your bank account as a number. You later decide that it was a bad idea and you want to change that to a “string”. If you do a rolling update, I can make 2 calls to the API and receive either “int” or “string”. This is because during the rolling update the Pods are replaced one by one, so I could get responses from the current or the previous Pods. In a blue-green deployment, I have “int” or “string”; the OR is exclusive here.
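To make the rolling update half of that comparison concrete, this is a sketch of the strategy block on a Deployment. The replica count and surge values are assumptions. Note that v1 and v2 Pods still serve traffic side by side while it runs, which is exactly the mixed “int”/“string” window described above.

```yaml
# Fragment of a Deployment
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1          # at most one extra Pod above the desired count
      maxUnavailable: 0    # never take an old Pod away before its replacement is ready
```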

https://learnk8s.io/kubernetes-rollbacks/

Open questions

Node pools only appear to exist in GKE

Why isn't it a native feature of Kubernetes? No tooling seems to be Node pool aware.

Why allow developers to request resources?

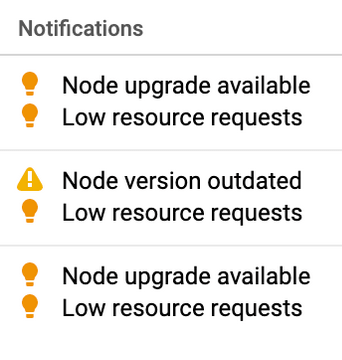

I think it's a foolish budgeting exercise for developers to request resources for their application to run. They might conservatively reserve 2GB, whilst 512MB is more than sufficient. This leads to very inefficient pod placement amongst your nodes and, in turn, to underutilized resources.
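For reference, this is roughly what such a request looks like in a pod template. The scheduler places pods based on the requested amount, not on what the container actually uses, so the over-reservation described above is set aside on the node whether or not it is needed. The container name and image are hypothetical.

```yaml
# Fragment of a pod template
containers:
  - name: knote
    image: example/knote:1.0.0   # hypothetical image
    resources:
      requests:
        memory: "2Gi"    # reserved on the node by the scheduler, even if only ~512Mi is used
      limits:
        memory: "2Gi"    # the container is killed if it exceeds this
```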

Workload isolation with Kubernetes seems poorly defined

Do you have a cluster per project? Do you put all your projects in one production cluster? If you put all your eggs in one basket, what happens when the Kubernetes master node fails? Complete chaos.

I don't like the idea of #devops making poorly documented decisions about why certain workloads are isolated in their own clusters, whilst others are grouped together.

Upgrade story

Upgrading and changing the declarative model appears non-deterministic to me. What is the upgrade path from, say, v1.12? Will there be downtime? It all seems to be a dark art.

https://k8s.af/ offers chilling testimony.

General tooling confusion

Is Heapster deprecated?

What tooling should I be using to find inefficiently placed workloads? Why am I even having to do this?

Credits aka thank you for helping me wrap my head around k8s

Many thanks to @danielepolencic of https://learnk8s.io/nodejs-kubernetes-guide/ and @alexellisuk.